# FireWorks

FireWorks is a free, open-source code for defining, managing, and executing complex scientific workflows.

## Strengths of FireWorks

- Well suited to NERSC: we already have many FireWorks users

- Easy to install via conda

- Server/worker model is inherently load-balancing

- Extremely flexible and able to handle complex job structures

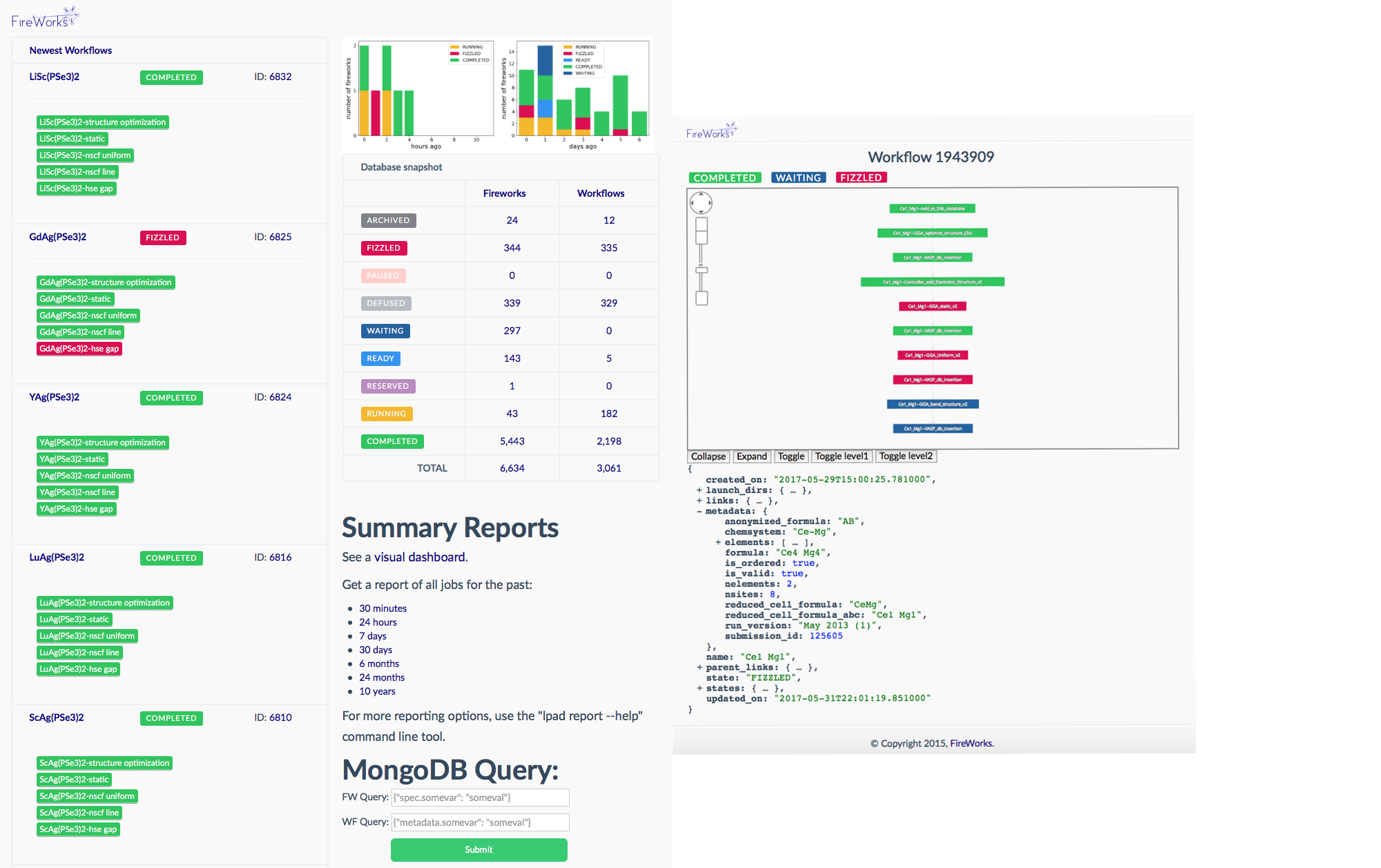

- Friendly dashboard to display workflow status

- Can handle jobs that contain MPI

- Interfaces well with SLURM

- Can run jobs on CPU or GPU

## Disadvantages of FireWorks

- Requires a MongoDB database, which must be set up by NERSC staff (contact help.nersc.gov)

- Takes some time to learn

## Overview of FireWorks

FireWorks uses a centralized server model: the server manages the workflows and workers run the jobs. It can handle complex job dependencies, restart jobs that have died, and track workflow status on a friendly dashboard. It can be used via bash scripting or via a Python API; this page covers using FireWorks from the bash shell. You can find more information in the official FireWorks documentation.

To use FireWorks you must first request a MongoDB database. Please use the database creation form here to request it.

If you need more help with FireWorks itself please open a separate ticket with NERSC consulting at help.nersc.gov.

## Terminology

FireWorks uses a number of terms to describe the different parts of the workflow manager:

- LaunchPad/FireServer: the MongoDB database that manages the workflows. It stores all the tasks to be run and their status (for example, whether they completed successfully).

- FireTask: a computing task to be performed, such as running a script.

- FireWork: a list of FireTasks, run in order.

- Rocket: fetches a FireWork from the LaunchPad and runs it. A Rocket can run on a separate machine (a FireWorker) or through a batch system (in this case, SLURM).
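To make these terms concrete, here is a toy, in-memory model in plain Python of how the pieces relate. It is not the FireWorks API: `ToyLaunchPad` and `run_rocket` are hypothetical names, and the real LaunchPad is a MongoDB database that tracks more states and metadata than this sketch does.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Toy model only: a FireTask is just a callable that does one unit of work.
FireTask = Callable[[], None]


@dataclass
class FireWork:
    """A list of FireTasks that one Rocket runs in order."""
    fw_id: int
    tasks: List[FireTask]
    state: str = "READY"


@dataclass
class ToyLaunchPad:
    """Stand-in for the MongoDB LaunchPad: stores FireWorks and their state."""
    fireworks: List[FireWork] = field(default_factory=list)

    def add(self, tasks: List[FireTask]) -> FireWork:
        fw = FireWork(fw_id=len(self.fireworks) + 1, tasks=tasks)
        self.fireworks.append(fw)
        return fw

    def checkout(self) -> Optional[FireWork]:
        """Hand the first READY FireWork to a Rocket and mark it RUNNING."""
        for fw in self.fireworks:
            if fw.state == "READY":
                fw.state = "RUNNING"
                return fw
        return None


def run_rocket(lp: ToyLaunchPad) -> bool:
    """One Rocket: fetch a FireWork, run its tasks in order, record the outcome.

    Returns False when there was nothing left to run.
    """
    fw = lp.checkout()
    if fw is None:
        return False
    try:
        for task in fw.tasks:
            task()
        fw.state = "COMPLETED"
    except Exception:
        fw.state = "FIZZLED"  # the state name FireWorks uses for a failed FireWork
    return True


# Usage: two identical FireWorks, like running "lpad add fw_test.yaml" twice.
lp = ToyLaunchPad()
log = []
lp.add([lambda: log.append("howdy")])
lp.add([lambda: log.append("howdy")])
run_rocket(lp)          # roughly what "rlaunch singleshot" does: one FireWork
while run_rocket(lp):   # roughly what "rlaunch rapidfire" does: until none are READY
    pass
print([fw.state for fw in lp.fireworks], log)  # ['COMPLETED', 'COMPLETED'] ['howdy', 'howdy']
```

The split mirrors the server/worker model: the LaunchPad only hands out work and records state, while each Rocket does the actual running.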

## Creating your FireWorks environment

We recommend that anyone wanting to use FireWorks at NERSC install it in a conda environment.

Create a new conda environment for FireWorks:

```bash
module load python
conda create -n fireworks python=3.8 -y
source activate fireworks
conda install -c conda-forge fireworks
```

For more information about using conda environments at NERSC, check out this page.

## Setting up your LaunchPad

You can set up the LaunchPad interactively with the command lpad init, as in the example shown below. You will need to specify the name of the database host and your username and password. Note that you need admin access to the database, so make sure you use the administrator username and password you were given.

On a Cori login node, navigate to the directory where you will issue your FireWorks commands. In this example, we are working from $HOME/fw_test. Make sure you have activated your FireWorks environment, for example via source activate fireworks.

How to configure your LaunchPad:

```text
lpad init

Please supply the following configuration values
(press Enter if you want to accept the defaults)

Enter host (default: localhost) : mongodb03
Enter port (default: 27017) :
Enter name (default: fireworks) : my_db
Enter username (default: None) : my_db_admin
Enter password (default: None) : my_pswd
```

This information will go into a file named "my_launchpad.yaml". This file should be located in the directory where you are issuing FireWorks commands.
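Because lpad init writes the password you typed into my_launchpad.yaml in plain text, you may want to make the file readable only by you and confirm that the connection fields were filled in. The sketch below does both with the Python standard library. It assumes the file is a flat list of `key: value` lines, as lpad init writes it (it is not a general YAML parser), and `check_launchpad_file` is a hypothetical helper name.

```python
import os
import stat

# Connection fields entered during lpad init.
REQUIRED_KEYS = ("host", "port", "name", "username", "password")


def check_launchpad_file(path: str = "my_launchpad.yaml") -> list:
    """Restrict path to owner read/write (like chmod 600) and return unset required keys.

    Assumes flat 'key: value' lines; nested YAML is not handled.
    """
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
    values = {}
    with open(path) as fh:
        for line in fh:
            key, sep, value = line.partition(":")
            if sep:
                values[key.strip()] = value.strip()
    # A key counts as unset if it is missing, empty, or null (lpad init's "None" default).
    return [k for k in REQUIRED_KEYS if values.get(k, "null") in ("", "null")]
```

Run it from the directory holding my_launchpad.yaml, for example `print(check_launchpad_file())`; an empty list means all five fields have values.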

## Basic example

Below is an example of how to use FireWorks at NERSC. This is based heavily on the FireWorks tutorial, which you will find here.

Save the following as fw_test.yaml:

```yaml
spec:
  _tasks:
  - _fw_name: ScriptTask
    script: echo "howdy, your job launched successfully!" >> howdy.txt
```

This FireWork just writes a line to a file called howdy.txt; in your real workload, substitute the command that runs your own script.
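If you need many FireWork files that differ only in the command they run, you could generate them instead of editing YAML by hand. This is a minimal sketch using only the Python standard library (no PyYAML assumed) that reproduces the fw_test.yaml layout for a single-line shell command; `scripttask_firework_yaml` is a hypothetical helper name.

```python
def scripttask_firework_yaml(script: str) -> str:
    """Return the text of a one-task FireWork file in the same layout as fw_test.yaml.

    The single-line shell command is written as a single-quoted YAML scalar
    (embedded single quotes are doubled), so characters such as ':' or '#' stay literal.
    """
    quoted = "'" + script.replace("'", "''") + "'"
    return (
        "spec:\n"
        "  _tasks:\n"
        "  - _fw_name: ScriptTask\n"
        f"    script: {quoted}\n"
    )


yaml_text = scripttask_firework_yaml('echo "howdy, your job launched successfully!" >> howdy.txt')
print(yaml_text, end="")
```

If you save this as, say, make_fw.py, then `python make_fw.py > fw_test.yaml` writes a file equivalent to the one above. Quoting the command is a defensive choice: the plain, unquoted form works for this echo command but can break for commands containing `: ` or ` #`.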

Singleshot example

Now let's add our fw_test.yaml to the launchpad and run it:

```bash
lpad add fw_test.yaml
rlaunch singleshot
```

The output should look like:

```text
(fireworks) stephey@cori10:~/fw_test> rlaunch singleshot
2021-02-24 19:57:04,135 INFO Hostname/IP lookup (this will take a few seconds)
2021-02-24 19:57:04,136 INFO Launching Rocket
2021-02-24 19:57:04,223 INFO RUNNING fw_id: 1 in directory: /global/u1/s/stephey/fw_test
2021-02-24 19:57:04,232 INFO Task started: ScriptTask.
2021-02-24 19:57:04,240 INFO Task completed: ScriptTask
2021-02-24 19:57:04,257 INFO Rocket finished
```

Rapidfire example

One task isn't very interesting. Let's try again with two tasks:

```bash
lpad add fw_test.yaml
lpad add fw_test.yaml
```

Note: adding the same file again creates a second, identical FireWork. Now let's run in rapidfire mode instead of singleshot mode:

```bash
rlaunch rapidfire
```

In this mode, FireWorks keeps launching Rockets until no FireWorks are left to run. The output of each Rocket (output files and job status) goes into its own launcher_* directory.

The output should look like:

```text
(fireworks) stephey@cori07:~> rlaunch rapidfire
2021-02-22 13:18:26,274 INFO Hostname/IP lookup (this will take a few seconds)
2021-02-22 13:18:26,351 INFO Created new dir /global/u1/s/stephey/launcher_2021-02-22-21-18-26-349075
2021-02-22 13:18:26,351 INFO Launching Rocket
2021-02-22 13:18:26,370 INFO RUNNING fw_id: 2 in directory: /global/u1/s/stephey/launcher_2021-02-22-21-18-26-349075
2021-02-22 13:18:26,378 INFO Task started: ScriptTask.
2021-02-22 13:18:26,385 INFO Task completed: ScriptTask
2021-02-22 13:18:26,402 INFO Rocket finished
2021-02-22 13:18:26,406 INFO Created new dir /global/u1/s/stephey/launcher_2021-02-22-21-18-26-406464
2021-02-22 13:18:26,406 INFO Launching Rocket
2021-02-22 13:18:26,423 INFO RUNNING fw_id: 3 in directory: /global/u1/s/stephey/launcher_2021-02-22-21-18-26-406464
2021-02-22 13:18:26,428 INFO Task started: ScriptTask.
2021-02-22 13:18:26,436 INFO Task completed: ScriptTask
2021-02-22 13:18:26,453 INFO Rocket finished
```
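With many rapidfire runs, the launcher_* directories pile up. The directory names in the log above embed a zero-padded timestamp, so sorting them alphabetically also sorts them by time. The sketch below uses that to find the newest one and print its howdy.txt. It assumes the naming pattern seen in the log, and `latest_launcher_dir` is a hypothetical helper name.

```python
import glob
import os
from typing import Optional


def latest_launcher_dir(base_dir: str = ".") -> Optional[str]:
    """Return the newest launcher_* directory under base_dir, or None if there is none.

    The names embed a zero-padded timestamp (launcher_YYYY-MM-DD-HH-MM-SS-ffffff),
    so the alphabetically last name is also the most recent one.
    """
    candidates = [p for p in glob.glob(os.path.join(base_dir, "launcher_*")) if os.path.isdir(p)]
    return max(candidates) if candidates else None


# Print howdy.txt from the most recent Rocket run in the current directory, if any.
newest = latest_launcher_dir()
if newest is not None:
    howdy = os.path.join(newest, "howdy.txt")
    if os.path.exists(howdy):
        with open(howdy) as fh:
            print(fh.read(), end="")
```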

## Submitting FireWorks jobs via SLURM

You are probably most interested in how to use FireWorks to launch jobs at NERSC via SLURM. We will use an example to demonstrate how this works. You can read more about how to launch FireWorks jobs in a queue in the official FireWorks documentation.

You will need the following:

- A queue adapter for SLURM, my_qadapter.yaml

- The script you want to run, wrapped in fw_test.yaml

You will also need to be in the directory where you configured your LaunchPad (i.e., where you ran lpad init). If you want to work elsewhere, you can move your my_launchpad.yaml file there or re-configure your LaunchPad.

Here is an example of the SLURM queue adapter. You can copy this and save it as my_qadapter.yaml.

```yaml
_fw_name: CommonAdapter
_fw_q_type: SLURM
rocket_launch: rlaunch -l my_launchpad.yaml rapidfire
ntasks: 1
cpus_per_task: 1
ntasks_per_node: 1
walltime: '00:02:00'
queue: debug
constraint: haswell
account: null
job_name: null
logdir: null
pre_rocket: null
post_rocket: null
```

You can read more about FireWorks queue adapters here.

Launching a FireWorks job via SLURM:

```bash
lpad reset
lpad add fw_test.yaml
qlaunch singleshot
```

The result should look something like:

```text
(fireworks) stephey@cori10:~/fw_test> qlaunch singleshot
2021-02-22 21:16:43,317 INFO moving to launch_dir /global/u1/s/stephey/fw_test
2021-02-22 21:16:43,318 INFO submitting queue script
2021-02-22 21:16:45,847 INFO Job submission was successful and job_id is 39771362
```

This means a SLURM job that will run your fw_test.yaml FireWork was submitted successfully.

To submit more tasks while keeping at most 3 jobs in the queue at a time, you can do the following:

```bash
lpad reset
lpad add fw_test.yaml
lpad add fw_test.yaml
lpad add fw_test.yaml
qlaunch rapidfire -m 3
```

To submit tasks in "infinite mode", which keeps up to 2 jobs in the queue and keeps checking the LaunchPad for new FireWorks until you stop it:

```bash
lpad reset
lpad add fw_test.yaml
lpad add fw_test.yaml
lpad add fw_test.yaml
lpad add fw_test.yaml
qlaunch rapidfire -m 2 --nlaunches infinite
```

You can find more information about launching FireWorks jobs via a queue here.

For information about how to check the status of your FireWorks tasks or workflows, please see here.

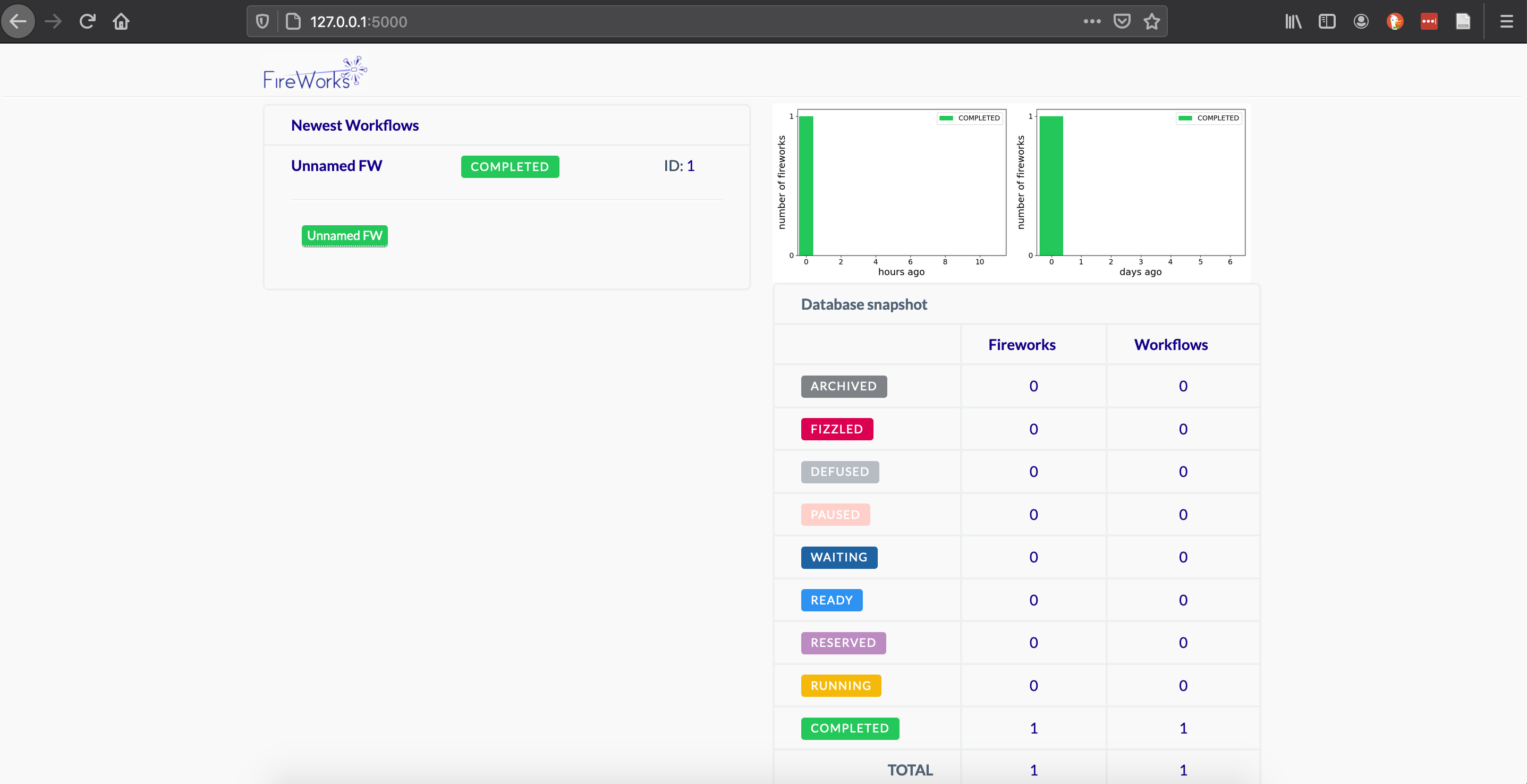

## Display the FireWorks dashboard

The FireWorks dashboard can be viewed on your laptop/desktop if you connect to Cori with ssh port forwarding. For example, connecting using an sshproxy key:

```bash
ssh -L 5000:localhost:5000 -i ~/.ssh/nersc cori.nersc.gov
```

Once you're on Cori, navigate to the directory where you have initialized your launchpad and start up the FireWorks webgui:

```bash
module load python
source activate fireworks
cd $HOME/fw_test
lpad webgui
```

This will open a summary, which you can exit by pressing q. Your console should then display:

```text
(fireworks) stephey@cori06:~/fw_test> lpad webgui
 * Serving Flask app "fireworks.flask_site.app" (lazy loading)
 * Environment: production
   WARNING: This is a development server. Do not use it in a production deployment.
   Use a production WSGI server instead.
 * Debug mode: off
 * Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)
```

Leave this window running. Press CTRL+C to stop the FireWorks dashboard when you're done.

Since you've forwarded port 5000 from Cori to port 5000 on your local machine, you can open a browser and navigate to http://127.0.0.1:5000/.

You should be able to see and interact with the FireWorks dashboard tracking your jobs on Cori.
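If the page does not load, a quick check from your laptop can tell whether anything is answering on the forwarded port. This is a minimal standard-library sketch, meant to be run locally while the ssh session is open; `dashboard_is_up` is a hypothetical helper name and the default URL is the one printed by lpad webgui above.

```python
import urllib.error
import urllib.request


def dashboard_is_up(url: str = "http://127.0.0.1:5000/", timeout: float = 5.0) -> bool:
    """Return True if an HTTP server answers at url, e.g. the webgui through the ssh tunnel."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


print("FireWorks dashboard reachable:", dashboard_is_up())
```

A False result usually means either the ssh tunnel or lpad webgui on Cori is not running.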

## Running a FireWorks GPU job via SLURM

Not all NERSC users can do this example

This FireWorks GPU example is for users with corigpu access only. It may also be useful to anyone preparing to run their FireWorks code on Perlmutter.

Prepare our FireWorks conda environment for GPU work:

```bash
module load cgpu
module load cuda/11.1.1
module load python
source activate fireworks
pip install cupy-cuda111
```

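The GPU CuPy program we'll run, cupy_eigh.py:

```python
import numpy as np
import cupy as cp


def cupy_eigh(input_data, precision):
    # copy the input matrix to the GPU
    x = cp.asarray(input_data, dtype=precision)
    # eigenvalues w and eigenvectors v of a symmetric matrix
    w, v = cp.linalg.eigh(x)
    # move the eigenvalues back to the CPU
    w_cpu = cp.asnumpy(w)
    return w_cpu


asize = 1000
rng = np.random.default_rng(seed=42)
randarray = rng.random((asize, asize))
# eigh expects a symmetric matrix, so symmetrize the random input
input_data = (randarray + randarray.T) / 2
precision = 'float64'

w_cpu = cupy_eigh(input_data, precision)
print(w_cpu)
```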
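To sanity-check the GPU result, you could compare it against NumPy on the CPU. The function below is an optional addition, not part of the original example, and `matches_cpu_reference` is a hypothetical name; inside cupy_eigh.py you would call it as `matches_cpu_reference(input_data, w_cpu)` after the GPU computation. For a 1000x1000 matrix the CPU computation takes a moment.

```python
import numpy as np


def matches_cpu_reference(input_data, w_gpu, rtol=1e-6, atol=1e-8) -> bool:
    """Check GPU eigenvalues against NumPy's CPU eigvalsh for the same matrix.

    Like cp.linalg.eigh, np.linalg.eigvalsh returns eigenvalues in ascending
    order and by default reads only the lower triangle of the input.
    """
    w_ref = np.linalg.eigvalsh(np.asarray(input_data, dtype="float64"))
    return bool(np.allclose(w_ref, np.asarray(w_gpu), rtol=rtol, atol=atol))


# CPU-only demonstration on a small matrix with known eigenvalues 1, 2, 3.
demo = np.diag([3.0, 1.0, 2.0])
print(matches_cpu_reference(demo, [1.0, 2.0, 3.0]))  # True
```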

Next, create a FireWork file, fw_test_gpu.yaml, that runs our GPU test workload:

```yaml
spec:
  _tasks:
  - _fw_name: ScriptTask
    script: srun -n 1 python cupy_eigh.py
```

You'll need to change your my_qadapter.yaml to allocate GPU resources:

```yaml
_fw_name: CommonAdapter
_fw_q_type: SLURM
rocket_launch: rlaunch -l my_launchpad.yaml rapidfire
constraint: gpu
ntasks: 1
cpus_per_task: 10
gpus_per_task: 1
account: m1759
walltime: '00:02:00'
queue: gpu_regular
job_name: null
logdir: null
pre_rocket: null
post_rocket: null
```

Edit the FireWorks SLURM template to include the SLURM GPU settings

The template is located at:

```text
$CONDA_PREFIX/lib/python3.8/site-packages/fireworks/user_objects/queue_adapters/SLURM_template.txt
```
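The python3.8 part of that path matches the environment created earlier; with a different Python version the directory name differs. This sketch asks Python where FireWorks is installed instead of hard-coding the version. Run it with the fireworks environment active. `find_slurm_template` is a hypothetical helper, and the path inside the package is taken from the location above.

```python
import importlib.util
import os
from typing import Optional


def find_slurm_template() -> Optional[str]:
    """Return the path of FireWorks' SLURM_template.txt in the active environment, or None."""
    spec = importlib.util.find_spec("fireworks")
    if spec is None or spec.origin is None:
        return None  # FireWorks is not importable from this Python
    pkg_dir = os.path.dirname(spec.origin)  # .../site-packages/fireworks
    path = os.path.join(pkg_dir, "user_objects", "queue_adapters", "SLURM_template.txt")
    return path if os.path.exists(path) else None


print(find_slurm_template())
```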

You'll need to add the line `#SBATCH --gpus-per-task=$${gpus_per_task}`:

```bash
#!/bin/bash -l

#SBATCH --nodes=$${nodes}
#SBATCH --ntasks=$${ntasks}
#SBATCH --ntasks-per-node=$${ntasks_per_node}
#SBATCH --ntasks-per-core=$${ntasks_per_core}
#SBATCH --core-spec=$${core_spec}
#SBATCH --exclude=$${exclude_nodes}
#SBATCH --cpus-per-task=$${cpus_per_task}
#SBATCH --gpus-per-task=$${gpus_per_task}
#SBATCH --gres=$${gres}
#SBATCH --qos=$${qos}
#SBATCH --time=$${walltime}
#SBATCH --time-min=$${time_min}
#SBATCH --partition=$${queue}
#SBATCH --account=$${account}
#SBATCH --job-name=$${job_name}
#SBATCH --license=$${license}
#SBATCH --output=$${job_name}-%j.out
#SBATCH --error=$${job_name}-%j.error
#SBATCH --constraint=$${constraint}
#SBATCH --signal=$${signal}
#SBATCH --mem=$${mem}
#SBATCH --mem-per-cpu=$${mem_per_cpu}
#SBATCH --mail-type=$${mail_type}
#SBATCH --mail-user=$${mail_user}

$${pre_rocket}
cd $${launch_dir}
$${rocket_launch}
$${post_rocket}

# CommonAdapter (SLURM) completed writing Template
```
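Why does the template need editing at all? Our understanding is that CommonAdapter fills this template using Python's `string.Template` with `$$` as the placeholder delimiter, then drops every line whose placeholder has no value. That would explain why so many `#SBATCH` lines can sit in the template, and why `gpus_per_task` in my_qadapter.yaml has no effect until the template has a line for it. The sketch below reproduces that idea with the standard library; it is a simplified illustration, not FireWorks' own code, and `QueueTemplate` and `render_queue_script` are hypothetical names. Please check the FireWorks source if the details matter for your setup.

```python
import string


class QueueTemplate(string.Template):
    # Placeholders in the FireWorks queue templates look like $${name}.
    delimiter = "$$"


def render_queue_script(template_text: str, params: dict) -> str:
    """Fill $${...} placeholders, then drop every line whose placeholder stayed unset."""
    values = {k: v for k, v in params.items() if v is not None}  # YAML null -> unset
    filled = QueueTemplate(template_text).safe_substitute(values)
    return "\n".join(line for line in filled.splitlines() if "$$" not in line)


template = """#!/bin/bash -l
#SBATCH --ntasks=$${ntasks}
#SBATCH --cpus-per-task=$${cpus_per_task}
#SBATCH --gpus-per-task=$${gpus_per_task}
#SBATCH --mem=$${mem}
#SBATCH --constraint=$${constraint}
cd $${launch_dir}
$${rocket_launch}"""

# Values from the GPU my_qadapter.yaml; mem is null to show a dropped line,
# and launch_dir is a placeholder path.
script = render_queue_script(template, {
    "ntasks": 1,
    "cpus_per_task": 10,
    "gpus_per_task": 1,
    "mem": None,
    "constraint": "gpu",
    "launch_dir": "/path/to/fw_test",
    "rocket_launch": "rlaunch -l my_launchpad.yaml rapidfire",
})
print(script)
```

In the printed script, the `--mem` line is gone because its value was null, while `--gpus-per-task=1` appears only because the template contains a line for it.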

Run our GPU test program in singleshot mode:

```bash
lpad reset
lpad add fw_test_gpu.yaml
qlaunch singleshot
```

The output should look like:

```text
(fireworks) stephey@cori10:~/fw_test> qlaunch singleshot
2021-02-24 21:32:10,943 INFO moving to launch_dir /global/u1/s/stephey/fw_test
2021-02-24 21:32:10,953 INFO submitting queue script
2021-02-24 21:32:11,084 INFO Job submission was successful and job_id is 1527948
```

If you have any questions or problems using FireWorks at NERSC, please contact us at help.nersc.gov.