The sparse multifrontal solver can optionally use Hierarchically Semi-Separable, rank-structured matrices to compress the fill-in. In the multifrontal method, computations are performed on dense matrices called frontal matrices. A frontal matrix can be approximated as an HSS matrix, but this will only be beneficial (compared to storing the frontal as a standard dense matrix and operating on it with BLAS/LAPACK routines) if the frontal matrix is large enough.

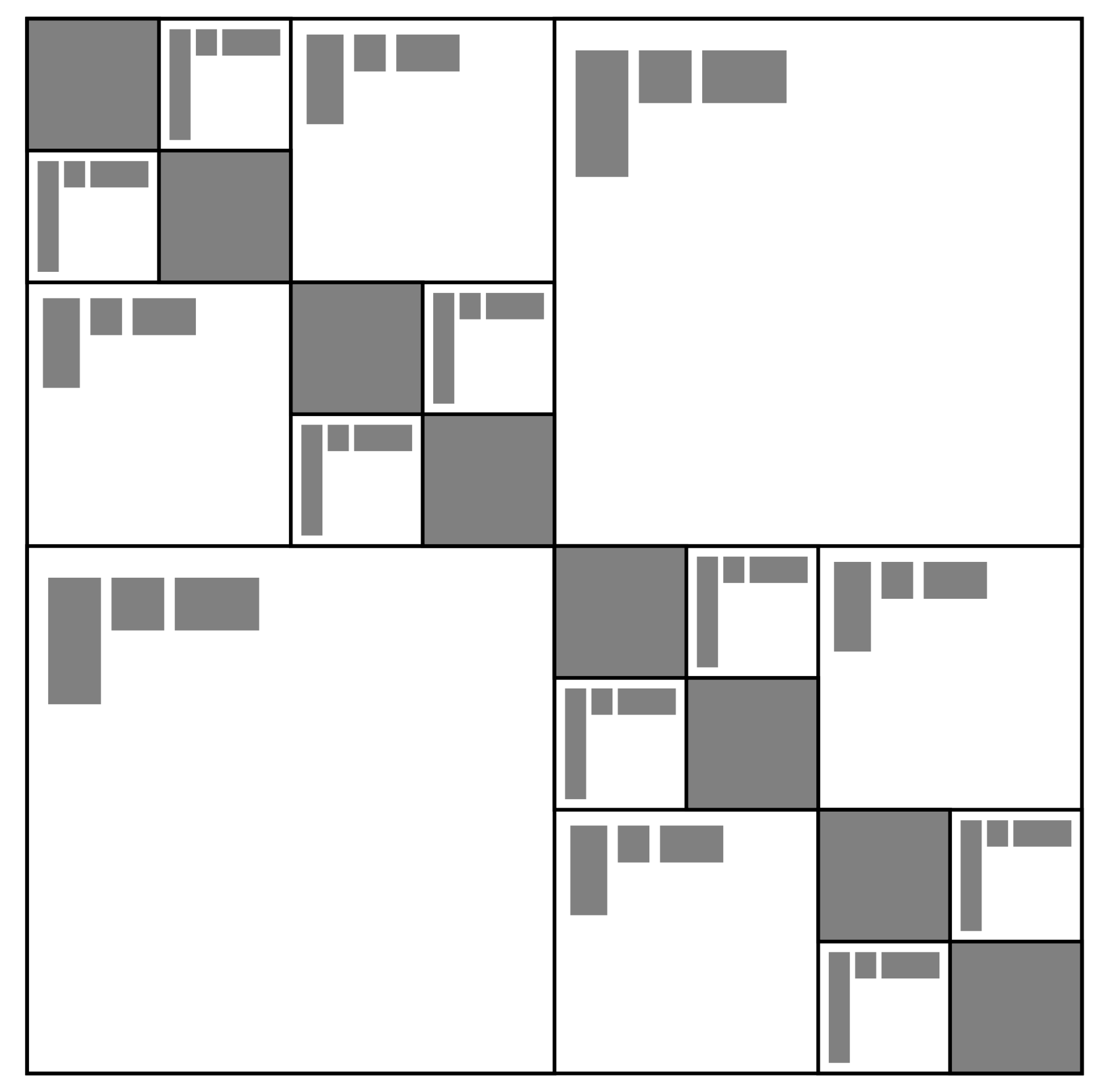

Figure 3 illustrates the HSS matrix format. The matrix is partitioned as a 2x2 block matrix, with the partitioning recursively applied on the diagonal blocks, until diagonal blocks are smaller than a specified leaf size. The off-diagonal block on each level of the hierarchy are approximated by a low-rank product. This low-rank storage format asymptotically reduces memory usage and floating point operations, while introducing approximation errors. HSS compression is not used by default in the STRUMPACK sparse solver (the default is to perform exact LU factorization), but can be turned on/off via the command line:

or via the C++ API as follows

When compression is enabled, the default STRUMPACK behavior is to use the approximate LU factorization as a preconditioner within GMRES. This behavior can also be changed, see Solve.

However, HSS compression has a considerable overhead and only pays off for sufficiently large matrices. Therefore STRUMPACK has a tuning parameter to specify the minimum size a dense matrix needs to be to be considered a candidate for HSS compression. The minimum dense matrix size for HSS compression is set via the command line via

or via the C++ API as follows

The routine set_compression_min_sep_size(int s) refers to the size of the top-left block of the front only. This top-left block is the part that corresponds to a separator, as given by the nested dissection reordering algorithm. This top-left block is also referred to as the block containing the fully-summed variables. Factorization (LU in the dense case, ULV in the HSS case) is only applied to this top-left block. Tuning the value for the minimum separator size can have a big impact on performance and memory usage!

The above options affect the use of HSS within the multifrontal solver. There are more, HSS specific, options which are stored in an object of type HSS::HSSOptions<scalar>. An object of this type is stored in the SPOptions<scalar> object stored in the StrumpackSparseSolver. It can be accessed via the HSS_options() routine as follows:

In STRUMPACK, HSS matrices are constructed using a randomized sampling algorithm [6]. To construct an HSS approximation for a matrix A, sampling of the rows and columns of A is computed by multiplication with a tall and skinny random matrix R as follows: S^r = AR and S^c = A^*R. Ideally, the number of columns in the matrix R is d = r + p, with r the maximum off-diagonal block rank in the HSS matrix and p a small oversampling parameter. Unfortunately, the HSS rank is not known a-priori, so it needs to determined adaptively. The adaptive sampling scheme used in STRUMPACK starts with an initial number of random vector d_0, and increases this in steps of \Delta d, until the compression quality reaches the desired user specified tolerance, or until the maximum rank is reached. The compression tolerances can greatly impact performance. They can be set using:

or via the C++ API

Other options are available to tune for instance the initial number of random vectors d_0, the increment \Delta d, the random number generator or the random number distribution. See the documentation of HSSOptions<scalar> for more detailed information. The corresponding HSS specific command line options are: