We recommend to use the strumpack::structured::StructuredMatrix interface instead of directly using the HSS classes. See Dense Solvers .

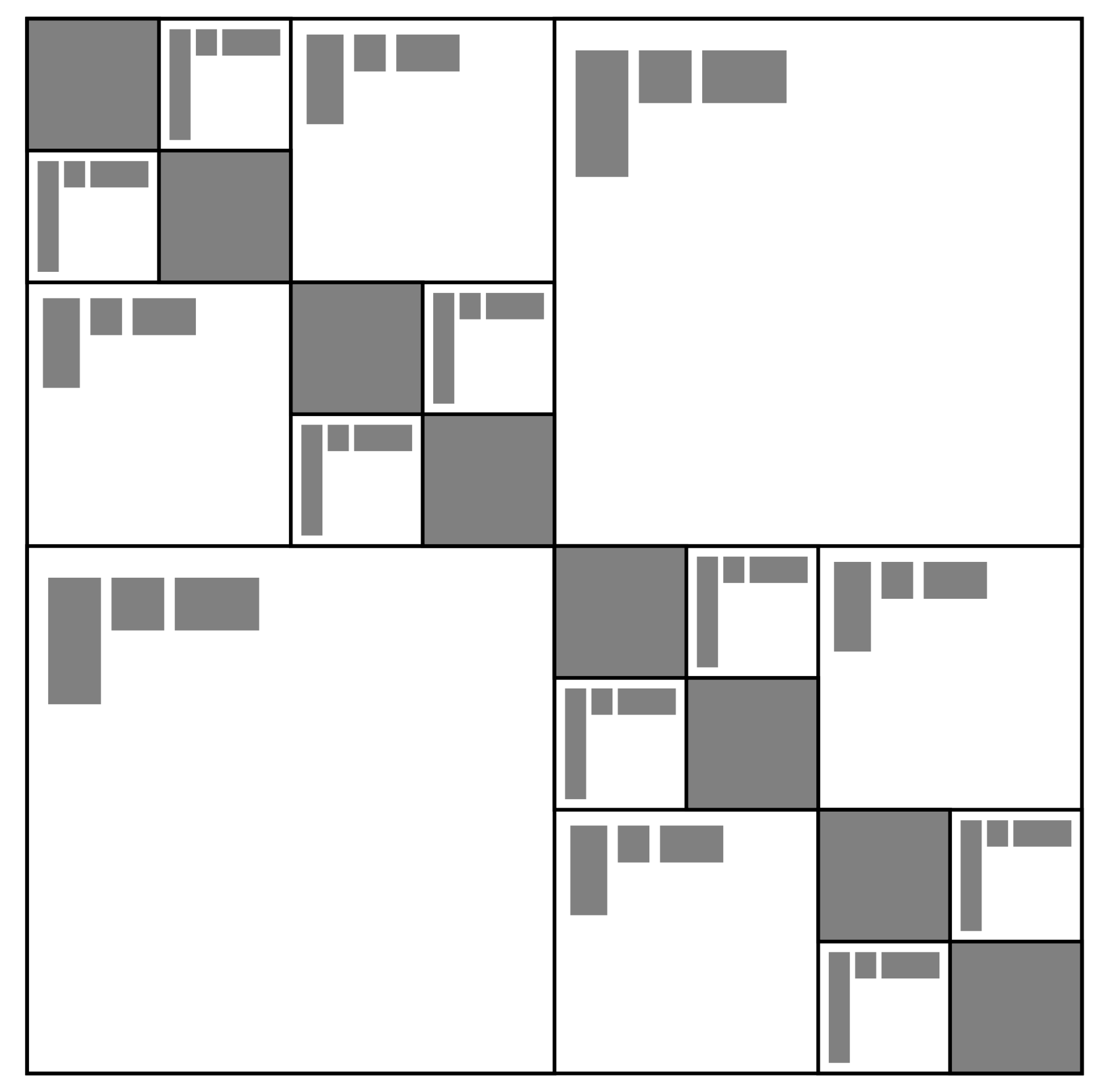

Figure 3: Illustration of a Hierarchically Semi-Separable (HSS) matrix. Gray blocks are dense matrices. Off- diagonal blocks, on different levels of the HSS hierarchy, are low-rank. The low-rank factors of off-diagonal blocks of different levels are related.

The HSS include files are installed in the include/HSS/ subdirectory, or in src/HSS/. All HSS code is in the namespace strumpack::HSS. The class for a sequential/multithreaded HSS matrix is strumpack::HSS::HSSMatrix, while the distributed memory HSS class is strumpack::HSS::HSSMatrixMPI. For examples of the usage of these classes, see the test code in test/test_HSS_seq.cpp and test/test_HSS_mpi.cpp respectively. There is also one sequential example in examples/dense/KernelRegression.cpp, which uses HSS compression for kernel matrices as used in certain machine learning applications, see below, and see for instance [2].

We use a simple wrapper class strumpack::DenseMatrix, as a wrapper around a column-major matrix. See the documentation for that class for more info.

There are currently two ways to construct an HSS matrix:

The interfaces to construct HSS matrices in these two different ways are detailed below.

Unfortunately, many applications do not fit in the two cases listed above, i.e., you cannot build the dense matrix first (too expensive), or you do not have a fast matrix vector product or fast acces to individual elements. We have experimental code to construct an HODLR matrix representation for those cases, see HODLR .

The clustering of the HSS matrix is defined by a strumpack::structured::ClusterTree. The strumpack::structured::ClusterTree uses a recursive representation, a child in the tree is also a strumpack::structured::ClusterTree. A node in this tree should always have 0 or 2 children, and the size of the tree should always be the sum of the sizes of its children. To build a whole tree use the simple constructor to specify the size n of the corresponding HSS matrix, then refine it.

To refine uniformly to a given leaf size is reached use:

or refine it manualy by adding nodes to the child vector strumpack::structured::ClusterTree::c:

Alternatively, if you have access underlying geometry that is used to construct the matrix, you can use one of the clustering algorithms, see binary_tree_clustering().

See the class strumpack::HSS::HSSMatrix, which is a subclass of the abstract class strumpack::HSS::HSSMatrixBase.

HSS matrix construction from an explicit dense matrix can be done as follows:

Alternatively, to construct an HSS without first building the whole dense matrix, you need to define a matrix-vector multiplication routine and an element extraction routine. The matrix-vector product should have t the following signature:

where Rr and Rc are random matrices (set by the HSS compression code), and the routine should fill in Sr and Sc. This can be a functor, or a lambda function for instance. The random sample matrix Sr should be computed by the matrix-(multiple)vector multiplication routine as A*Rr. The Sr matrix will aready be allocated by the compression routine and should satisfy Sr.rows() == A.rows() and Sr.cols() == Rr.cols(). The random sample matrix Sc should be computed by the matrix-(multiple)vector multiplication routine as A^T*Rc, or A^C*Rc. This will aready be allocated by the compression routine and should be Sc.rows() == A.cols() and Sc.cols() == Rc.cols().

And the element extraction routine should have the signature:

where the user is responsible for computing the elements A(I,J) and putting them into the matrix B. B will already be allocated and is of size I.size() x J.size().

The HSS construction would look as follows:

We have an optimized HSS construction algorithm for the so called kernel matrices, which arise in several applications, such as kernel ridge regression in machine learning. One can use the strumpack::HSS::HSSMatrix constructor:

However, for kernel ridge regression, the strumpack::kernel::Kernel class provides some easy to use driver routines, see

There is also a Python interface to these Kernel regression routines, compatibile with scikit-learn, see install/python/STRUMPACKKernel.py and examples/dense/KernelRegression.py.

Currently the class strumpack::HSS::HSSMatrixMPI cannot be used for on a communicator with a single MPI process. In that case, one should use the sequential strumpack::HSS::HSSMatrix class.