The following shows the typical way to use a (sequential or multithreaded) STRUMPACK sparse solver:

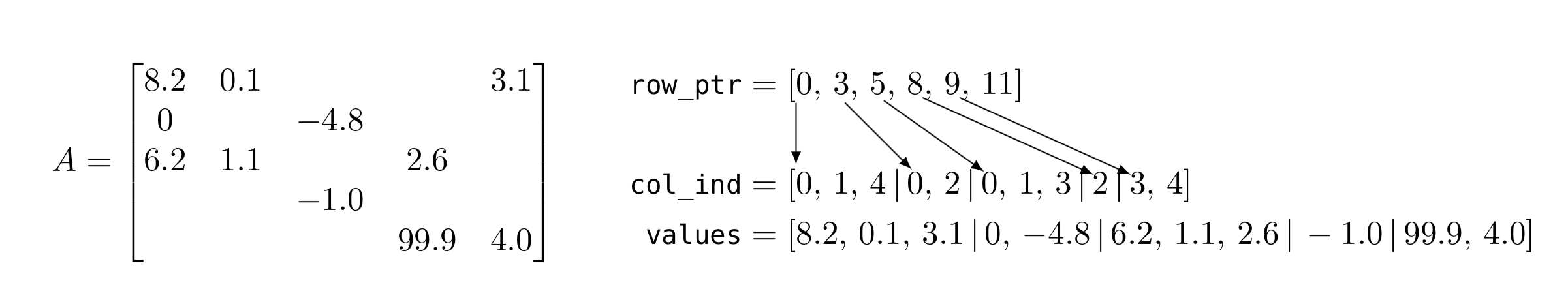

Figure 1: Illustration of a small 5 × 5 sparse matrix with 11 nonzeros and its Compressed Sparse Row (CSR) or Yale format representation. We always use 0-based indexing! Let \(N = 5\) denote the number of rows. The row_ptr array has \(N + 1\) elements, with element \(i\) denoting the start of row \(i\) in the col_ind and values arrays. Element row_ptr[N] = nnz, i.e., the total number of nonzero elements in the matrix. The values array holds the actual matrix values, ordered by row. The corresponding elements in col_ind give the column indices for each nonzero. There can be explicit zero elements in the matrix. The nonzero values and corresponding column indices need not be sorted by column (within a row).
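To make the CSR layout concrete, here is a minimal C++ sketch of a 0-based CSR matrix with 5 rows and 11 stored entries. The CSRMatrix struct and the helpers make_example, is_valid_csr and csr_matvec are hypothetical illustrations and not part of STRUMPACK, and the matrix entries are made up (they are not necessarily those shown in Figure 1). Row 2 is deliberately stored with unsorted column indices, which the format allows.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical container for a square CSR matrix with 0-based indices.
struct CSRMatrix {
  int N;                       // number of rows (and columns)
  std::vector<int> row_ptr;    // N+1 entries; row_ptr[N] == nnz
  std::vector<int> col_ind;    // column index of each stored entry
  std::vector<double> values;  // stored entries, ordered by row
};

// An illustrative 5 x 5 matrix with 11 stored entries (made-up values).
CSRMatrix make_example() {
  CSRMatrix A;
  A.N = 5;
  A.row_ptr = {0, 2, 4, 7, 9, 11};
  //           row 0 | row 1 | row 2 (unsorted) | row 3 | row 4
  A.col_ind = {0, 3,   1, 2,   2, 0, 4,           3, 1,   4, 2};
  A.values  = {1, 2,   3, 4,   6, 5, 7,           8, 9,   10, 11};
  return A;
}

// Check the structural invariants described in the caption.
bool is_valid_csr(const CSRMatrix& A) {
  if (A.N < 0) return false;
  if (A.row_ptr.size() != static_cast<std::size_t>(A.N) + 1) return false;
  if (A.row_ptr[0] != 0) return false;
  for (int i = 0; i < A.N; i++)
    if (A.row_ptr[i] > A.row_ptr[i + 1]) return false;
  const std::size_t nnz = static_cast<std::size_t>(A.row_ptr[A.N]);
  if (A.col_ind.size() != nnz || A.values.size() != nnz) return false;
  for (int c : A.col_ind)
    if (c < 0 || c >= A.N) return false;
  return true;
}

// y = A * x, walking each row through row_ptr.
std::vector<double> csr_matvec(const CSRMatrix& A,
                               const std::vector<double>& x) {
  assert(x.size() == static_cast<std::size_t>(A.N));
  std::vector<double> y(A.N, 0.0);
  for (int i = 0; i < A.N; i++)
    for (int k = A.row_ptr[i]; k < A.row_ptr[i + 1]; k++)
      y[i] += A.values[k] * x[A.col_ind[k]];
  return y;
}
```

Multiplying by a vector of ones sums each row, which is a quick way to check that row_ptr, col_ind and values line up.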

The main steps are: create the solver object, set options (parse options from the command line), set the matrix, reorder, factor and finally solve. The matrix should be in the Compressed Sparse Row (CSR) format, also called Yale format, with 0-based indices. Figure 1 illustrates the CSR format. In the basic scenario, it is not necessary to call reorder and factor explicitly: solving with a StrumpackSparseSolver object that has not been factored yet will internally call the factor routine, which in turn calls reorder if necessary.

The above code should be linked with -lstrumpack and with the Metis, ParMetis, Scotch, PT-Scotch, BLAS, LAPACK, and ScaLAPACK libraries.

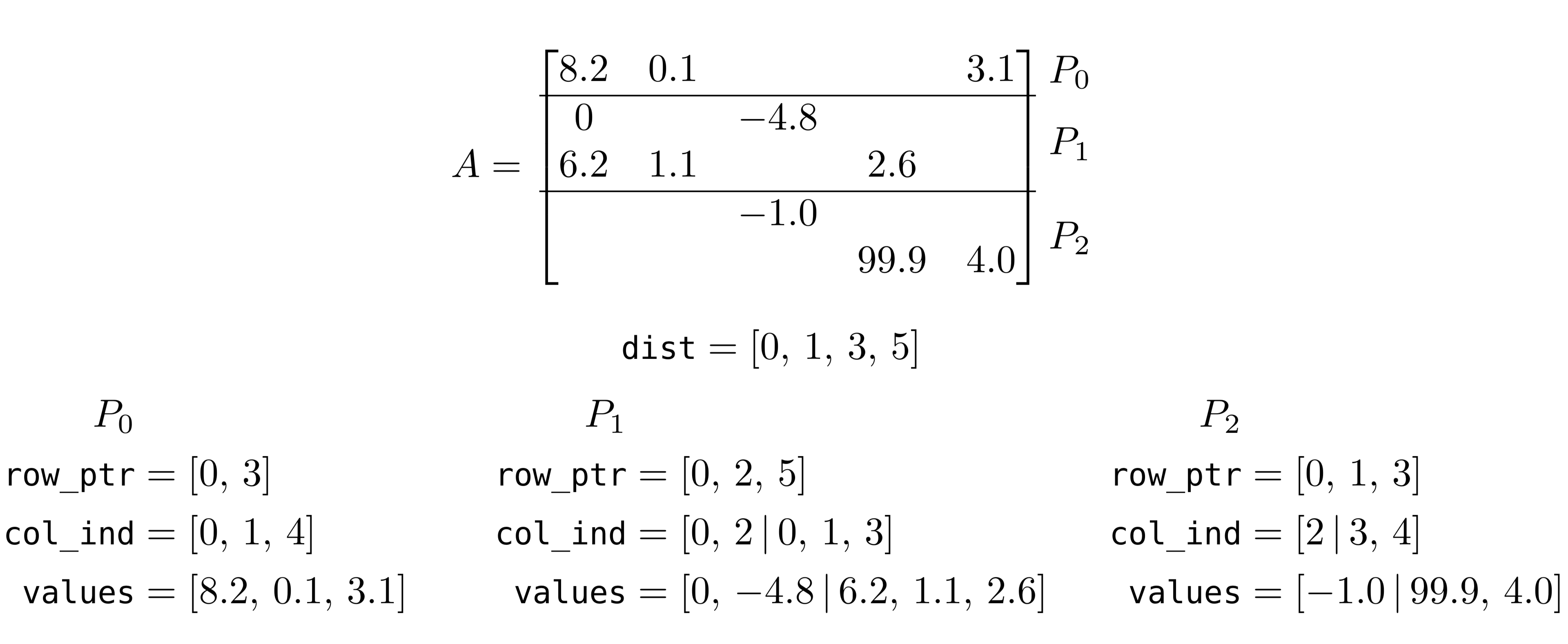

Finally, we illustrate the usage of the StrumpackSparseSolverMPIDist<scalar,integer=int> solver. This interface takes a block-row distributed compressed sparse row matrix as input. This matrix format is illustrated in Figure 2 (below).

Figure 2: Illustration of a small 5×5 sparse matrix with 11 nonzeros and its block-row distributed compressed sparse row representation. We always use 0-based indexing! Process P0 owns row 0, process P1 has rows 1 and 2 and process P2 has rows 3 and 4. This distribution of rows over the processes is represented by the dist array. Process p owns rows [dist[p],dist[p+1]). If N = 5 is the number of rows in the entire matrix and P is the total number of processes, then dist[P]=N. The (same) dist array is stored on every process. Each process holds a CSR representation of only its local rows of the matrix, see Figure 1.
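The block-row distribution can be sketched in a few lines of C++. The LocalCSR struct and the functions owner_of_row and extract_local_rows are hypothetical helpers, not STRUMPACK API. The sketch assumes that each process stores its local row_ptr starting at 0 and keeps global column indices in col_ind; check this against Figure 2 before relying on it. In a real application each process would normally assemble only its own rows; slicing a global CSR matrix is done here purely for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical holder for the rows owned by one process.
struct LocalCSR {
  int first_row;               // dist[p], the first global row owned
  std::vector<int> row_ptr;    // local_rows + 1 entries, starting at 0 (assumption)
  std::vector<int> col_ind;    // global column indices (assumption)
  std::vector<double> values;  // values of the locally owned rows
};

// Process that owns global row i: the p with dist[p] <= i < dist[p+1].
int owner_of_row(const std::vector<int>& dist, int i) {
  assert(dist.size() >= 2 && i >= dist.front() && i < dist.back());
  int p = 0;
  while (i >= dist[p + 1]) p++;
  return p;
}

// Slice rows [dist[p], dist[p+1]) out of a global CSR matrix.
LocalCSR extract_local_rows(const std::vector<int>& row_ptr,
                            const std::vector<int>& col_ind,
                            const std::vector<double>& values,
                            const std::vector<int>& dist, int p) {
  assert(p >= 0 && static_cast<std::size_t>(p) + 1 < dist.size());
  const int begin = dist[p], end = dist[p + 1];
  const int offset = row_ptr[begin];  // first stored entry of the block
  LocalCSR L;
  L.first_row = begin;
  for (int i = begin; i <= end; i++)
    L.row_ptr.push_back(row_ptr[i] - offset);  // shift so the block starts at 0
  L.col_ind.assign(col_ind.begin() + offset, col_ind.begin() + row_ptr[end]);
  L.values.assign(values.begin() + offset, values.begin() + row_ptr[end]);
  return L;
}
```

With dist = {0, 1, 3, 5} as in the caption, process 1 receives global rows 1 and 2, and its local row_ptr has three entries.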

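Let Sp, SpMPI and SpMPIDist be shorthand for the solver classes: Sp for StrumpackSparseSolver, SpMPIDist for StrumpackSparseSolverMPIDist, and SpMPI for the MPI solver class that sits between them in the class hierarchy.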

Each of the solver classes has two constructors:

where argc and argv contain the command line options and the verbose option can be set to false to suppress output of the solver. Note that since SpMPIDist is a subclass of SpMPI, which is a subclass of Sp, all public members of Sp are also members of SpMPI and SpMPIDist. The public interface to the SpMPI class is exactly the same as that for the Sp class.

The sparse matrix should be specified in compressed sparse row format [8]:

Internally, the matrix is copied, so it will not be modified. Previous versions of STRUMPACK also supported the CSC format, but this is now deprecated. If the sparsity pattern of the matrix is symmetric (the values do not have to be symmetric), then you can set symmetric_pattern=true. This saves some work in the setup phase of the solver.

For the SpMPIDist solver the input is a block-row distributed compressed sparse row matrix (as illustrated in the example above):

Alternatively, you can also specify a sequential CSR matrix to the SpMPIDist solver:

For this routine, the matrix only needs to be specified completely on the root process. Other processes can pass NULL for the arrays.

Compute the factorization by calling

where the possible return values are the same as for Sp::reorder(). If Sp::reorder() was not called already, it is called automatically. When compression is not enabled, this will compute an exact LU factorization of the (permuted) sparse input matrix. If HSS/HODLR/BLR compression is enabled (for instance with SPOptions::set_compression() or --sp_compression BLR, see Preconditioning), the factorization is only approximate.

Solve the linear system \(Ax = b\) by calling

By default (bool use_initial_guess=false) the input in x is ignored. If bool use_initial_guess=true, x is used as the initial guess for the iterative solver (if an iterative solver is used, for instance iterative refinement or GMRES). If Sp::factor() was not called, it is called automatically. The return values are the same as for Sp::reorder().

The iterative solver can be chosen through:

where KrylovSolver can take the following values:

with KrylovSolver::AUTO being the default value. The KrylovSolver::AUTO setting will use iterative refinement when HSS compression is not enabled, and preconditioned GMRES when HSS compression is enabled, see HSS Preconditioning. To use the solver as a preconditioner, or a single (approximate) solve, set the solver to KrylovSolver::DIRECT. When calling SpMPIDist::solve, the right-hand side and solution vectors should only point to the local parts!
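To illustrate what iterative refinement with an optional initial guess does, here is a small self-contained C++ sketch. It is not STRUMPACK's implementation: the matrix is dense, and the approximate solve is a simple Jacobi (diagonal) scaling standing in for the exact or compressed factorization. The names Vec, matvec, norm2, jacobi_solver and iterative_refinement, as well as the tolerance and iteration limit, are assumptions made for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;

// y = A * x for a dense, row-major n x n matrix A.
Vec matvec(const Vec& A, const Vec& x) {
  const std::size_t n = x.size();
  Vec y(n, 0.0);
  for (std::size_t i = 0; i < n; i++)
    for (std::size_t j = 0; j < n; j++)
      y[i] += A[i * n + j] * x[j];
  return y;
}

double norm2(const Vec& v) {
  double s = 0.0;
  for (double vi : v) s += vi * vi;
  return std::sqrt(s);
}

// Stand-in approximate solver: divide by the diagonal of A (Jacobi).
std::function<Vec(const Vec&)> jacobi_solver(const Vec& A) {
  return [A](const Vec& r) {
    const std::size_t n = r.size();
    Vec z(n);
    for (std::size_t i = 0; i < n; i++) z[i] = r[i] / A[i * n + i];
    return z;
  };
}

// Iterative refinement: x <- x + approx_solve(b - A x) until the relative
// residual drops below rel_tol. Returns the number of correction steps.
int iterative_refinement(const Vec& A, const Vec& b, Vec& x,
                         const std::function<Vec(const Vec&)>& approx_solve,
                         bool use_initial_guess = false,
                         double rel_tol = 1e-10, int max_it = 200) {
  if (!use_initial_guess) x.assign(b.size(), 0.0);  // contents of x are ignored
  assert(x.size() == b.size());
  const double bnorm = norm2(b);
  for (int it = 0; it < max_it; it++) {
    Vec r = matvec(A, x);
    for (std::size_t i = 0; i < r.size(); i++) r[i] = b[i] - r[i];
    if (norm2(r) <= rel_tol * bnorm) return it;
    const Vec dx = approx_solve(r);
    for (std::size_t i = 0; i < x.size(); i++) x[i] += dx[i];
  }
  return max_it;
}
```

With an exact approximate solve the loop stops after a single correction; the less accurate the approximate solve, the more steps are needed, which is one reason a Krylov method such as GMRES is preferred when the factorization is compressed.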

The STRUMPACK sparse solver applies three different matrix orderings

The reordering for numerical stability is performed using MC64 or Combinatorial BLAS. For many matrices, this reordering is not necessary and can safely be disabled! MC64 supports 5 different modes and there is one option to select the Combinatorial BLAS code:

which can be selected via

where matching() queries the currently selected strategy (the default is MAX_DIAGONAL_PRODUCT_SCALING: maximum product of diagonal values plus row and column scaling). The command line option

can also be used, where the integers are defined as:

The MC64 code is sequential, so when using this option in parallel, the graph is first gathered to the root process. The Combinatorial BLAS code can currently only be used in parallel, and only with a square number of processes.

The STRUMPACK sparse solver supports both (Par)Metis and (PT-)Scotch for the matrix reordering. The following functions can set the preferred method or check the currently selected method:

The options for MatrixReorderingStrategy are

When the solver is an object of type Sp, PARMETIS and PTSCOTCH are not supported. When the solver is parallel (either an SpMPI or SpMPIDist object) and METIS, SCOTCH or RCM is chosen, the graph of the complete matrix is gathered onto the root process, and the root process calls the (sequential) Metis, Scotch or RCM reordering routine. For large graphs this might fail due to insufficient memory.

The GEOMETRIC option is only allowed for regular grids. In this case, the dimensions of the grid should be specified in the function

For instance, for a regular 2D \(2000 \times 4000\) grid, you can call this as sp.reorder(2000, 4000). In the general algebraic case, the grid dimensions don’t have to be provided. The reordering method can also be specified via the command line option
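Returning to the regular-grid case: the kind of matrix for which a geometric reordering makes sense can be sketched as follows. The code builds the CSR arrays of a 5-point stencil on an nx × ny grid. The GridCSR struct and laplacian_2d function are hypothetical, and the node numbering (index i + nx*j, with the first grid dimension varying fastest) is an assumption; consult the STRUMPACK documentation for the numbering that reorder(nx, ny) actually expects.

```cpp
#include <cassert>
#include <vector>

// Hypothetical container for the CSR arrays of a grid matrix.
struct GridCSR {
  int N;                       // number of grid nodes = number of rows
  std::vector<int> row_ptr;    // N+1 entries
  std::vector<int> col_ind;    // 0-based column indices
  std::vector<double> values;  // stencil coefficients
};

// 5-point Laplacian on an nx x ny grid.
// Node (i, j) gets index i + nx*j (assumed numbering).
GridCSR laplacian_2d(int nx, int ny) {
  assert(nx > 0 && ny > 0);
  GridCSR A;
  A.N = nx * ny;
  A.row_ptr.reserve(A.N + 1);
  A.row_ptr.push_back(0);
  auto add = [&A](int col, double v) {
    A.col_ind.push_back(col);
    A.values.push_back(v);
  };
  for (int j = 0; j < ny; j++) {
    for (int i = 0; i < nx; i++) {
      const int row = i + nx * j;
      add(row, 4.0);                           // diagonal
      if (i > 0)      add(row - 1, -1.0);      // left neighbor
      if (i < nx - 1) add(row + 1, -1.0);      // right neighbor
      if (j > 0)      add(row - nx, -1.0);     // neighbor in previous grid line
      if (j < ny - 1) add(row + nx, -1.0);     // neighbor in next grid line
      A.row_ptr.push_back(static_cast<int>(A.col_ind.size()));
    }
  }
  return A;
}
```

The resulting row_ptr, col_ind and values arrays have the CSR layout described earlier, and the sparsity pattern is symmetric.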

The sparse solver options are stored in an object of class SPOptions, which can be accessed through:

see also StrumpackOptions.hpp for several enumerations.

To get a list of all available options, make sure to pass int argc, char* argv[] when initializing the StrumpackSparseSolver or when calling SPOptions::set_from_command_line, and run the application with --help or -h. Some default values listed here are for double precision and might be different when running in single precision.

STRUMPACK sparse solver options: