2D block cyclically distributed matrix, as used by ScaLAPACK.

#include <DistributedMatrix.hpp>

Public Member Functions

| DistributedMatrix () | |

| DistributedMatrix (const BLACSGrid *g, int M, int N) | |

| DistributedMatrix (const BLACSGrid *g, int M, int N, const std::function< scalar_t(std::size_t, std::size_t)> &A) | |

| DistributedMatrix (const BLACSGrid *g, const DenseMatrix< scalar_t > &m) | |

| DistributedMatrix (const BLACSGrid *g, DenseMatrix< scalar_t > &&m) | |

| DistributedMatrix (const BLACSGrid *g, DenseMatrixWrapper< scalar_t > &&m) | |

| DistributedMatrix (const BLACSGrid *g, int M, int N, const DistributedMatrix< scalar_t > &m, int context_all) | |

| DistributedMatrix (const BLACSGrid *g, int M, int N, int MB, int NB) | |

| DistributedMatrix (const DistributedMatrix< scalar_t > &m) | |

| DistributedMatrix (DistributedMatrix< scalar_t > &&m) | |

| virtual | ~DistributedMatrix () |

| DistributedMatrix< scalar_t > & | operator= (const DistributedMatrix< scalar_t > &m) |

| DistributedMatrix< scalar_t > & | operator= (DistributedMatrix< scalar_t > &&m) |

| const int * | desc () const |

| bool | active () const |

| const BLACSGrid * | grid () const |

| const MPIComm & | Comm () const |

| MPI_Comm | comm () const |

| int | ctxt () const |

| int | ctxt_all () const |

| virtual int | rows () const |

| virtual int | cols () const |

| int | lrows () const |

| int | lcols () const |

| int | ld () const |

| int | MB () const |

| int | NB () const |

| int | rowblocks () const |

| int | colblocks () const |

| virtual int | I () const |

| virtual int | J () const |

| virtual void | lranges (int &rlo, int &rhi, int &clo, int &chi) const |

| const scalar_t * | data () const |

| scalar_t * | data () |

| const scalar_t & | operator() (int r, int c) const |

| scalar_t & | operator() (int r, int c) |

| int | prow () const |

| int | pcol () const |

| int | nprows () const |

| int | npcols () const |

| int | npactives () const |

| bool | is_master () const |

| int | rowl2g (int row) const |

| int | coll2g (int col) const |

| int | rowg2l (int row) const |

| int | colg2l (int col) const |

| int | rowg2p (int row) const |

| int | colg2p (int col) const |

| int | rank (int r, int c) const |

| bool | is_local (int r, int c) const |

| const scalar_t & | global (int r, int c) const |

| scalar_t & | global (int r, int c) |

| void | global (int r, int c, scalar_t v) |

| scalar_t | all_global (int r, int c) const |

| void | print () const |

| void | print (std::string name, int precision=15) const |

| void | print_to_file (std::string name, std::string filename, int width=8) const |

| void | print_to_files (std::string name, int precision=16) const |

| void | random () |

| void | random (random::RandomGeneratorBase< typename RealType< scalar_t >::value_type > &rgen) |

| void | zero () |

| void | fill (scalar_t a) |

| void | fill (const std::function< scalar_t(std::size_t, std::size_t)> &A) |

| void | eye () |

| void | shift (scalar_t sigma) |

| scalar_t | trace () const |

| void | clear () |

| virtual void | resize (std::size_t m, std::size_t n) |

| virtual void | hconcat (const DistributedMatrix< scalar_t > &b) |

| DistributedMatrix< scalar_t > | transpose () const |

| void | mult (Trans op, const DistributedMatrix< scalar_t > &X, DistributedMatrix< scalar_t > &Y) const |

| void | laswp (const std::vector< int > &P, bool fwd) |

| DistributedMatrix< scalar_t > | extract_rows (const std::vector< std::size_t > &Ir) const |

| DistributedMatrix< scalar_t > | extract_cols (const std::vector< std::size_t > &Ic) const |

| DistributedMatrix< scalar_t > | extract (const std::vector< std::size_t > &I, const std::vector< std::size_t > &J) const |

| DistributedMatrix< scalar_t > & | add (const DistributedMatrix< scalar_t > &B) |

| DistributedMatrix< scalar_t > & | scaled_add (scalar_t alpha, const DistributedMatrix< scalar_t > &B) |

| DistributedMatrix< scalar_t > & | scale_and_add (scalar_t alpha, const DistributedMatrix< scalar_t > &B) |

| real_t | norm () const |

| real_t | normF () const |

| real_t | norm1 () const |

| real_t | normI () const |

| virtual std::size_t | memory () const |

| virtual std::size_t | total_memory () const |

| virtual std::size_t | nonzeros () const |

| virtual std::size_t | total_nonzeros () const |

| void | scatter (const DenseMatrix< scalar_t > &a) |

| DenseMatrix< scalar_t > | gather () const |

| DenseMatrix< scalar_t > | all_gather () const |

| DenseMatrix< scalar_t > | dense_and_clear () |

| DenseMatrix< scalar_t > | dense () const |

| DenseMatrixWrapper< scalar_t > | dense_wrapper () |

| std::vector< int > | LU () |

| int | LU (std::vector< int > &) |

| DistributedMatrix< scalar_t > | solve (const DistributedMatrix< scalar_t > &b, const std::vector< int > &piv) const |

| void | LQ (DistributedMatrix< scalar_t > &L, DistributedMatrix< scalar_t > &Q) const |

| void | orthogonalize (scalar_t &r_max, scalar_t &r_min) |

| void | ID_column (DistributedMatrix< scalar_t > &X, std::vector< int > &piv, std::vector< std::size_t > &ind, real_t rel_tol, real_t abs_tol, int max_rank) |

| void | ID_row (DistributedMatrix< scalar_t > &X, std::vector< int > &piv, std::vector< std::size_t > &ind, real_t rel_tol, real_t abs_tol, int max_rank, const BLACSGrid *grid_T) |

| std::size_t | subnormals () const |

| std::size_t | zeros () const |

Static Public Attributes

| static const int | default_MB = STRUMPACK_PBLAS_BLOCKSIZE |

| static const int | default_NB = STRUMPACK_PBLAS_BLOCKSIZE |


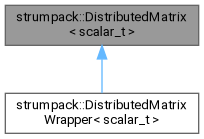

This class represents a 2D block cyclic matrix, in the layout used by ScaLAPACK. To create a submatrix of a DistributedMatrix, use a DistributedMatrixWrapper.

Several routines in this class are collective on all processes in the process grid. The BLACSGrid passed when constructing the matrix should persist as long as the matrix is in use (in scope).

| scalar_t | Possible values for the scalar_t template type are: float, double, std::complex<float> and std::complex<double>. |
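To make the 2D block cyclic layout concrete, the following is a minimal, self-contained sketch of the index arithmetic along one dimension (rows and columns are treated independently). It assumes 0-based indices and that the first block lives on process coordinate 0. The helper names `g2p`, `g2l` and `l2g` are hypothetical; they only mirror the roles of members such as rowg2p, rowg2l and rowl2g and are not the library's implementation.

```cpp
#include <cassert>

// 1D block cyclic index maps (assumed 0-based, first block on process 0).
// nb: block size, np: number of processes along this dimension.

// Process coordinate that owns global index g.
int g2p(int g, int nb, int np) {
  assert(g >= 0 && nb > 0 && np > 0);
  return (g / nb) % np;
}

// Local index of global index g on the process that owns it.
int g2l(int g, int nb, int np) {
  assert(g >= 0 && nb > 0 && np > 0);
  return (g / (nb * np)) * nb + g % nb;
}

// Global index of local index l on process coordinate p.
int l2g(int l, int p, int nb, int np) {
  assert(l >= 0 && p >= 0 && p < np && nb > 0);
  return ((l / nb) * np + p) * nb + l % nb;
}
```

With block size 2 on 3 process rows, global row 7 lies in block 3, which wraps around to process row 0 and becomes local row 3 there.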

| strumpack::DistributedMatrix< scalar_t >::DistributedMatrix | ( | ) |

Default constructor. The grid will not be initialized.

| strumpack::DistributedMatrix< scalar_t >::DistributedMatrix | ( | const BLACSGrid * | g, |

| int | M, | ||

| int | N | ||

| ) |

Construct a DistributedMatrix of size MxN on grid g, using the default blocksizes strumpack::DistributedMatrix<scalar_t>::default_MB and strumpack::DistributedMatrix<scalar_t>::default_NB. The values of the matrix are not set.

| g | BLACS grid. |

| M | number of (global) rows |

| N | number of (global) columns |

| strumpack::DistributedMatrix< scalar_t >::DistributedMatrix | ( | const BLACSGrid * | g, |

| int | M, | ||

| int | N, | ||

| const std::function< scalar_t(std::size_t, std::size_t)> & | A | ||

| ) |

Construct a DistributedMatrix of size MxN on grid g, using the default blocksize. The values of the matrix are initialized using the specified function.

| g | BLACS grid. |

| M | number of (global) rows |

| N | number of (global) columns |

| A | Routine to be used to initialize the matrix, by calling this->fill(A). |
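As an illustration of what initializing from such a function amounts to on a single rank, here is a self-contained sketch that fills one rank's local block in column-major order by calling A with global indices. It does not use the library. `fill_local`, `l2g` and all of their parameters are hypothetical names, and 0-based indexing with the first block on process coordinate 0 is assumed.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Global index of local index l on process coordinate p
// (block size nb, np processes along this dimension).
std::size_t l2g(std::size_t l, std::size_t p, std::size_t nb, std::size_t np) {
  return ((l / nb) * np + p) * nb + l % nb;
}

// Fill the lrows x lcols local block of rank (prow, pcol), stored
// column-major with leading dimension lrows, from A(global row, global col).
std::vector<double> fill_local(
    std::size_t lrows, std::size_t lcols,
    std::size_t prow, std::size_t pcol,
    std::size_t nprows, std::size_t npcols,
    std::size_t MB, std::size_t NB,
    const std::function<double(std::size_t, std::size_t)>& A) {
  assert(prow < nprows && pcol < npcols && MB > 0 && NB > 0);
  std::vector<double> local(lrows * lcols);
  for (std::size_t lc = 0; lc < lcols; lc++)
    for (std::size_t lr = 0; lr < lrows; lr++)
      local[lr + lc * lrows] =
          A(l2g(lr, prow, MB, nprows), l2g(lc, pcol, NB, npcols));
  return local;
}
```

In this model each rank evaluates A only for the entries it owns, so no communication is needed to initialize the matrix.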

| strumpack::DistributedMatrix< scalar_t >::DistributedMatrix | ( | const BLACSGrid * | g, |

| const DenseMatrix< scalar_t > & | m | ||

| ) |

Create a DistributedMatrix from a DenseMatrix. This only works on a BLACS grid with a single process. Values will be copied from the input DenseMatrix into the new DistributedMatrix.

| strumpack::DistributedMatrix< scalar_t >::DistributedMatrix | ( | const BLACSGrid * | g, |

| DenseMatrix< scalar_t > && | m | ||

| ) |

Create a DistributedMatrix by moving from a DenseMatrix. This only works on a BLACS grid with a single process. The input DenseMatrix will be cleared.

| strumpack::DistributedMatrix< scalar_t >::DistributedMatrix | ( | const BLACSGrid * | g, |

| DenseMatrixWrapper< scalar_t > && | m | ||

| ) |

Create a DistributedMatrix by moving from a DenseMatrixWrapper. This only works on a BLACS grid with a single process. Values will be copied from the input DenseMatrix(Wrapper) into the new DistributedMatrix.

| strumpack::DistributedMatrix< scalar_t >::DistributedMatrix | ( | const BLACSGrid * | g, |

| int | M, | ||

| int | N, | ||

| const DistributedMatrix< scalar_t > & | m, | ||

| int | context_all | ||

| ) |

Construct a new DistributedMatrix as a copy of another. The sizes must be specified explicitly because not all processes involved may know the sizes of the source matrix. The input matrix might be on a different BLACSGrid.

| g | BLACS grid of the matrix to be constructed |

| M | (global) number of rows of m, and of new matrix |

| N | (global) number of columns of m, and of new matrix |

| context_all | BLACS context containing all processes in g and in m.grid(). If g and m.grid() are the same, then this can be g->ctxt_all(). Ideally this is a BLACS context with all processes arranged as a single row, since the routine called from this constructor, P_GEMR2D, is more efficient when this is the case. |

| strumpack::DistributedMatrix< scalar_t >::DistributedMatrix | ( | const BLACSGrid * | g, |

| int | M, | ||

| int | N, | ||

| int | MB, | ||

| int | NB | ||

| ) |

Construct a new MxN (global sizes) DistributedMatrix on processor grid g, using block sizes MB and NB. Matrix values will not be initialized.

Note that some operations, such as LU factorization, require MB == NB.

| strumpack::DistributedMatrix< scalar_t >::DistributedMatrix | ( | const DistributedMatrix< scalar_t > & | m | ) |

Copy constructor.

| strumpack::DistributedMatrix< scalar_t >::DistributedMatrix | ( | DistributedMatrix< scalar_t > && | m | ) |

Move constructor.

| strumpack::DistributedMatrix< scalar_t >::~DistributedMatrix | ( | ) | virtual |

Destructor.


| scalar_t strumpack::DistributedMatrix< scalar_t >::all_global | ( | int | r, |

| int | c | ||

| ) | const |

Return (on all ranks) the value at global position r,c. Ranks that are not active, i.e., not in the BLACSGrid, will receive 0. Since the value at position r,c is stored on only one rank, this performs an (expensive) broadcast, so it should not be used in a loop.

| r | global row |

| c | global column |

| int strumpack::DistributedMatrix< scalar_t >::colblocks | ( | ) | const | inline |

Return the number of block columns stored on this rank.


| int strumpack::DistributedMatrix< scalar_t >::cols | ( | ) | const | inline virtual |

Get the number of global columns in the matrix.

Reimplemented in strumpack::DistributedMatrixWrapper< scalar_t >.


| int strumpack::DistributedMatrix< scalar_t >::ctxt | ( | ) | const | inline |

Get the BLACS context from the grid.

| int strumpack::DistributedMatrix< scalar_t >::ctxt_all | ( | ) | const | inline |

Get the global BLACS context from the grid. The global context is the context that includes all MPI ranks from the communicator used to construct the grid.

| const scalar_t * strumpack::DistributedMatrix< scalar_t >::data | ( | ) | const | inline |

Return raw pointer to local storage.

| scalar_t * strumpack::DistributedMatrix< scalar_t >::data | ( | ) | inline |

Return raw pointer to local storage.

| const int * strumpack::DistributedMatrix< scalar_t >::desc | ( | ) | const | inline |

Return the descriptor array.

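| const scalar_t & strumpack::DistributedMatrix< scalar_t >::global | ( | int | r, |

| int | c | ||

| ) | const | inline |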

Access global element r,c. This requires global element r,c to be stored locally on this rank (else it will result in undefined behavior or trigger an assertion). Requires is_local(r,c).

| r | global row |

| c | global column |

| scalar_t & strumpack::DistributedMatrix< scalar_t >::global | ( | int | r, |

| int | c | ||

| ) | inline |

Access global element r,c. This requires global element r,c to be stored locally on this rank (else it will result in undefined behavior or trigger an assertion). Requires is_local(r,c).

| r | global row |

| c | global column |

| void strumpack::DistributedMatrix< scalar_t >::global | ( | int | r, |

| int | c, | ||

| scalar_t | v | ||

| ) | inline |

Set global element r,c to v if that element is stored locally on this rank; on ranks that do not store the element, the call does nothing.

| r | global row |

| c | global column |

| v | value to set at global position r,c |

| const BLACSGrid * strumpack::DistributedMatrix< scalar_t >::grid | ( | ) | const | inline |

Get a pointer to the 2D processor grid used.

| int strumpack::DistributedMatrix< scalar_t >::I | ( | ) | const | inline virtual |

Row index of top left element. This is always 1 for a DistributedMatrix. For a DistributedMatrixWrapper, this can differ from 1 to denote the position in the original matrix.

Reimplemented in strumpack::DistributedMatrixWrapper< scalar_t >.


| int strumpack::DistributedMatrix< scalar_t >::J | ( | ) | const | inline virtual |

Column index of top left element. This is always 1 for a DistributedMatrix. For a DistributedMatrixWrapper, this can differ from 1 to denote the position in the original matrix.

Reimplemented in strumpack::DistributedMatrixWrapper< scalar_t >.

| int strumpack::DistributedMatrix< scalar_t >::lcols | ( | ) | const | inline |

Get the number of local columns in the matrix.

| int strumpack::DistributedMatrix< scalar_t >::ld | ( | ) | const | inline |

Get the leading dimension for the local storage.

| void strumpack::DistributedMatrix< scalar_t >::lranges | ( | int & | rlo, |

| int & | rhi, | ||

| int & | clo, | ||

| int & | chi | ||

| ) | const | virtual |

Get the ranges of local rows and columns. For a DistributedMatrix, this will simply be (0, lrows(), 0, lcols()). For a DistributedMatrixWrapper, this returns the local rows/columns of the DistributedMatrix that correspond to the submatrix represented by the wrapper. The values are 0-based.

| rlo | output parameter, first local row |

| rhi | output parameter, one more than the last local row |

| clo | output parameter, first local column |

| chi | output parameter, one more than the last local column |

Reimplemented in strumpack::DistributedMatrixWrapper< scalar_t >.
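The half-open local ranges described above can be derived from a simple counting argument: the first local index of a submatrix equals the number of locally owned indices that precede it globally. The sketch below shows this along one dimension. It is a self-contained model, not the library's code; `local_count`, `LocalRange` and `local_range` are hypothetical names, the first block is assumed to live on process coordinate 0, and `offset` is 0-based here (unlike I() and J(), which this page describes as 1 for a full matrix).

```cpp
#include <cassert>

// Number of indices owned by process coordinate p among the first n
// global indices (block size nb, np processes along this dimension).
int local_count(int n, int nb, int p, int np) {
  assert(n >= 0 && nb > 0 && np > 0 && p >= 0 && p < np);
  int nblocks = n / nb;             // complete blocks among the first n
  int count = (nblocks / np) * nb;  // complete rounds over all processes
  int extra = nblocks % np;         // complete blocks in the last round
  if (p < extra) count += nb;            // p holds one more complete block
  else if (p == extra) count += n % nb;  // p holds the trailing partial block
  return count;
}

struct LocalRange { int lo, hi; };  // half-open range [lo, hi)

// Local index range on process p for global indices [offset, offset + size).
LocalRange local_range(int offset, int size, int nb, int p, int np) {
  return {local_count(offset, nb, p, np),
          local_count(offset + size, nb, p, np)};
}
```

For a full matrix (offset 0, size M) the range reduces to [0, local_count(M, ...)), which matches the (0, lrows()) form given above.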

| int strumpack::DistributedMatrix< scalar_t >::lrows | ( | ) | const | inline |

Get the number of local rows in the matrix.

| int strumpack::DistributedMatrix< scalar_t >::MB | ( | ) | const | inline |

Get the row blocksize.

| int strumpack::DistributedMatrix< scalar_t >::NB | ( | ) | const | inline |

Get the column blocksize.

| int strumpack::DistributedMatrix< scalar_t >::npactives | ( | ) | const | inline |

Get the number of active processes in the process grid. This can be less than Comm().size(), but should be nprows()*npcols(). This requires grid() != NULL.
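To see why the number of active processes can be smaller than the communicator size, consider a grid whose dimensions are chosen to be as close to square as possible. How the library actually chooses the grid dimensions is not stated on this page, so the rule below is an assumption made purely for illustration, and `GridShape`, `near_square_grid` and `active_count` are hypothetical names.

```cpp
#include <cassert>

struct GridShape { int nprows, npcols; };

// Hypothetical near-square grid choice for P processes:
// npcols = floor(sqrt(P)), nprows = P / npcols (integer division).
GridShape near_square_grid(int P) {
  assert(P > 0);
  int npcols = 1;
  while ((npcols + 1) * (npcols + 1) <= P) npcols++;
  return {P / npcols, npcols};
}

// Processes that fit in the grid; the remaining ones stay inactive.
int active_count(GridShape g) { return g.nprows * g.npcols; }
```

Under this assumed rule, 7 processes give a 3 x 2 grid with 6 active processes and 1 idle process, while 16 processes fill a 4 x 4 grid completely.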


| DistributedMatrix< scalar_t > & strumpack::DistributedMatrix< scalar_t >::operator= | ( | const DistributedMatrix< scalar_t > & | m | ) |

Copy assignment.

| m | matrix to copy from |

| DistributedMatrix< scalar_t > & strumpack::DistributedMatrix< scalar_t >::operator= | ( | DistributedMatrix< scalar_t > && | m | ) |

Move assignment.

| m | matrix to move from, will be cleared. |


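| int strumpack::DistributedMatrix< scalar_t >::rank | ( | int | r, |

| int | c | ||

| ) | const | inline |

Get the MPI rank, in the communicator used to construct the grid, of the process that stores global element r,c. This assumes a column major ordering of the grid, see strumpack::BLACSGrid.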

| r | global row |

| c | global column |
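A minimal sketch of this lookup, under stated assumptions: indices are 0-based, the first block sits on process (0,0), and the process at grid position (prow, pcol) has rank prow + pcol * nprows, which is what a column major ordering of the grid means. `owner_rank` and its parameters are hypothetical names, not part of the library.

```cpp
#include <cassert>

// Rank of the process storing global element (r, c) in an
// nprows x npcols grid with column-major rank ordering.
int owner_rank(int r, int c, int MB, int NB, int nprows, int npcols) {
  assert(r >= 0 && c >= 0 && MB > 0 && NB > 0 && nprows > 0 && npcols > 0);
  int prow = (r / MB) % nprows;  // process row owning global row r
  int pcol = (c / NB) % npcols;  // process column owning global column c
  return prow + pcol * nprows;   // column-major position in the grid
}
```

On a 2 x 3 grid with 2 x 2 blocks, element (3,5) maps to process row 1 and process column 2, hence rank 1 + 2 * 2 = 5.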

| int strumpack::DistributedMatrix< scalar_t >::rowblocks | ( | ) | const | inline |

Return the number of block rows stored on this rank.


| int strumpack::DistributedMatrix< scalar_t >::rows | ( | ) | const | inline virtual |

Get the number of global rows in the matrix.

Reimplemented in strumpack::DistributedMatrixWrapper< scalar_t >.

| const int strumpack::DistributedMatrix< scalar_t >::default_MB = STRUMPACK_PBLAS_BLOCKSIZE | static |

Default row blocksize used for 2D block cyclic distribution. This is set during CMake configuration.

The blocksize is set to a larger value when SLATE is used in order to achieve better GPU performance.

The default value is used when no blocksize is specified during matrix construction. Since the default value is a power of 2, some index mapping calculations between local/global can be optimized.
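One way a power-of-2 blocksize can simplify the local/global index mapping is by replacing integer division and modulo with a shift and a mask. The sketch below illustrates that general technique only; whether the library applies exactly this optimization is not stated here, and `Pow2Block` is a hypothetical helper. It is valid for non-negative indices.

```cpp
#include <cassert>

// Block arithmetic for a blocksize nb that is a power of 2.
struct Pow2Block {
  int shift;  // log2(nb)
  int mask;   // nb - 1
  explicit Pow2Block(int nb) : shift(0), mask(nb - 1) {
    assert(nb > 0 && (nb & (nb - 1)) == 0);  // nb must be a power of 2
    while ((1 << shift) < nb) shift++;
  }
  int block(int g) const { return g >> shift; }  // same as g / nb
  int offset(int g) const { return g & mask; }   // same as g % nb
};
```

For example, with nb = 32 the block index of global index g is g >> 5 and the position inside the block is g & 31.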

| const int strumpack::DistributedMatrix< scalar_t >::default_NB = STRUMPACK_PBLAS_BLOCKSIZE | static |

Default column blocksize used for 2D block cyclic distribution. This is set during CMake configuration.