Performs LU factorization in parallel. More...

#include <math.h>#include "superlu_sdefs.h"#include "gpu_api_utils.h"#include "sscatter.c"#include "slook_ahead_update.c"#include "sSchCompUdt-2Ddynamic.c"

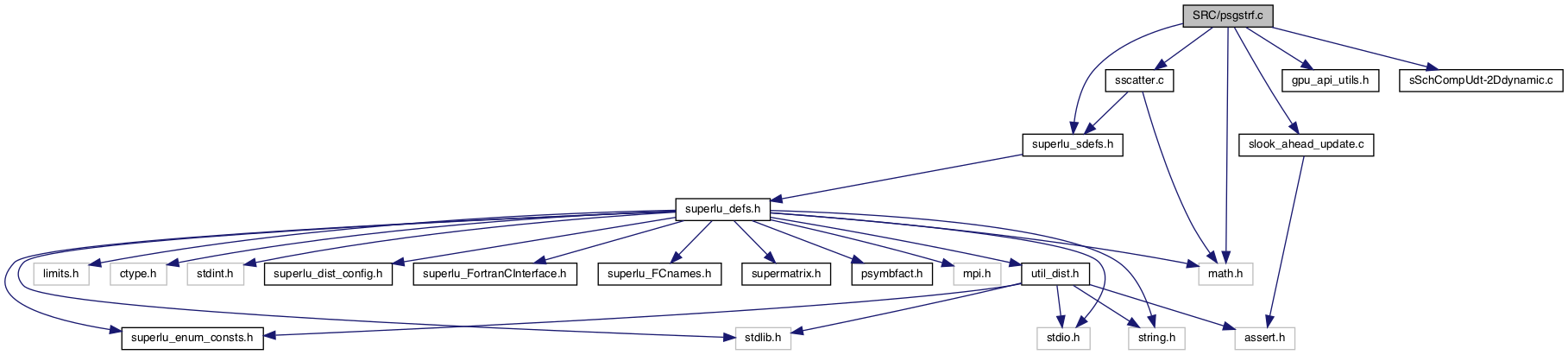

Include dependency graph for psgstrf.c:

Macros | |

| #define | PHI_FRAMEWORK |

| #define | CACHELINE 0 /* not worry about false sharing of different threads */ |

| #define | GEMM_PADLEN 8 |

| #define | PSGSTRF2 psgstrf2_trsm |

Functions | |

| int | superlu_sort_perm (const void *arg1, const void *arg2) |

| int_t | psgstrf (superlu_dist_options_t *options, int m, int n, float anorm, sLUstruct_t *LUstruct, gridinfo_t *grid, SuperLUStat_t *stat, int *info) |

Detailed Description

Performs LU factorization in parallel.

Copyright (c) 2003, The Regents of the University of California, through Lawrence Berkeley National Laboratory (subject to receipt of any required approvals from U.S. Dept. of Energy)

All rights reserved.

The source code is distributed under BSD license, see the file License.txt at the top-level directory.

-- Distributed SuperLU routine (version 9.0) -- Lawrence Berkeley National Lab, Univ. of California Berkeley. October 1, 2014 Modified: September 1, 1999 Feburary 7, 2001 use MPI_Isend/MPI_Irecv October 15, 2008 latency-reducing panel factorization July 12, 2011 static scheduling and arbitrary look-ahead March 13, 2013 change NTAGS to MPI_TAG_UB value September 24, 2015 replace xLAMCH by xMACH, using C99 standard. December 31, 2015 rename xMACH to xMACH_DIST. September 30, 2017 optimization for Intel Knights Landing (KNL) node . June 1, 2018 add parallel AWPM pivoting; add back arrive_at_ublock() February 8, 2019 version 6.1.1 Sketch of the algorithm ======================= The following relations hold: * A_kk = L_kk * U_kk * L_ik = Aik * U_kk^(-1) * U_kj = L_kk^(-1) * A_kj ---------------------------------- | | | ----|----------------------------- | | \ U_kk| | | | \ | U_kj | | |L_kk \ | || | ----|-------|---------||---------- | | | \/ | | | | | | | | | | | | | | | L_ik ==> A_ij | | | | | | | | | | | | | ---------------------------------- Handle the first block of columns separately. * Factor diagonal and subdiagonal blocks and test for exact singularity. ( psgstrf2(0), one column at a time ) * Compute block row of U * Update trailing matrix Loop over the remaining blocks of columns. mycol = MYCOL( iam, grid ); myrow = MYROW( iam, grid ); N = nsupers; For (k = 1; k < N; ++k) { krow = PROW( k, grid ); kcol = PCOL( k, grid ); Pkk = PNUM( krow, kcol, grid ); * Factor diagonal and subdiagonal blocks and test for exact singularity. if ( mycol == kcol ) { psgstrf2(k), one column at a time } * Parallel triangular solve if ( iam == Pkk ) multicast L_k,k to this process row; if ( myrow == krow && mycol != kcol ) { Recv L_k,k from process Pkk; for (j = k+1; j < N; ++j) if ( PCOL( j, grid ) == mycol && A_k,j != 0 ) U_k,j = L_k,k \ A_k,j; } * Parallel rank-k update if ( myrow == krow ) multicast U_k,k+1:N to this process column; if ( mycol == kcol ) multicast L_k+1:N,k to this process row; if ( myrow != krow ) { Pkj = PNUM( krow, mycol, grid ); Recv U_k,k+1:N from process Pkj; } if ( mycol != kcol ) { Pik = PNUM( myrow, kcol, grid ); Recv L_k+1:N,k from process Pik; } for (j = k+1; k < N; ++k) { for (i = k+1; i < N; ++i) if ( myrow == PROW( i, grid ) && mycol == PCOL( j, grid ) && L_i,k != 0 && U_k,j != 0 ) A_i,j = A_i,j - L_i,k * U_k,j; } }

Macro Definition Documentation

◆ CACHELINE

| #define CACHELINE 0 /* not worry about false sharing of different threads */ |

◆ GEMM_PADLEN

| #define GEMM_PADLEN 8 |

◆ PHI_FRAMEWORK

| #define PHI_FRAMEWORK |

◆ PSGSTRF2

| #define PSGSTRF2 psgstrf2_trsm |

Function Documentation

◆ psgstrf()

| int_t psgstrf | ( | superlu_dist_options_t * | options, |

| int | m, | ||

| int | n, | ||

| float | anorm, | ||

| sLUstruct_t * | LUstruct, | ||

| gridinfo_t * | grid, | ||

| SuperLUStat_t * | stat, | ||

| int * | info | ||

| ) |

Purpose

=======

PSGSTRF performs the LU factorization in parallel.

Arguments

=========

options (input) superlu_dist_options_t*

The structure defines the input parameters to control

how the LU decomposition will be performed.

The following field should be defined:

o ReplaceTinyPivot (yes_no_t)

= NO: do not modify pivots

= YES: replace tiny pivots by sqrt(epsilon)*norm(A) during

LU factorization.

m (input) int

Number of rows in the matrix.

n (input) int

Number of columns in the matrix.

anorm (input) float

The norm of the original matrix A, or the scaled A if

equilibration was done.

LUstruct (input/output) sLUstruct_t*

The data structures to store the distributed L and U factors.

The following fields should be defined:

o Glu_persist (input) Glu_persist_t*

Global data structure (xsup, supno) replicated on all processes,

describing the supernode partition in the factored matrices

L and U:

xsup[s] is the leading column of the s-th supernode,

supno[i] is the supernode number to which column i belongs.

o Llu (input/output) sLocalLU_t*

The distributed data structures to store L and U factors.

See superlu_sdefs.h for the definition of 'sLocalLU_t'.

grid (input) gridinfo_t*

The 2D process mesh. It contains the MPI communicator, the number

of process rows (NPROW), the number of process columns (NPCOL),

and my process rank. It is an input argument to all the

parallel routines.

Grid can be initialized by subroutine SUPERLU_GRIDINIT.

See superlu_ddefs.h for the definition of 'gridinfo_t'.

stat (output) SuperLUStat_t*

Record the statistics on runtime and floating-point operation count.

See util.h for the definition of 'SuperLUStat_t'.

info (output) int*

= 0: successful exit

< 0: if info = -i, the i-th argument had an illegal value

> 0: if info = i, U(i,i) is exactly zero. The factorization has

been completed, but the factor U is exactly singular,

and division by zero will occur if it is used to solve a

system of equations.

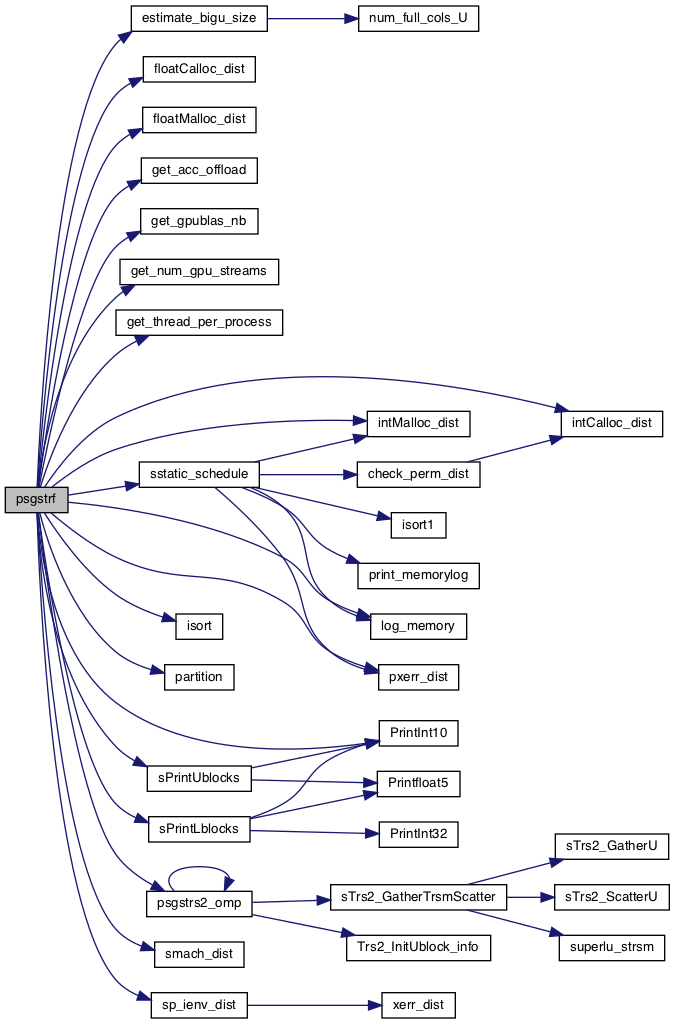

Here is the call graph for this function:

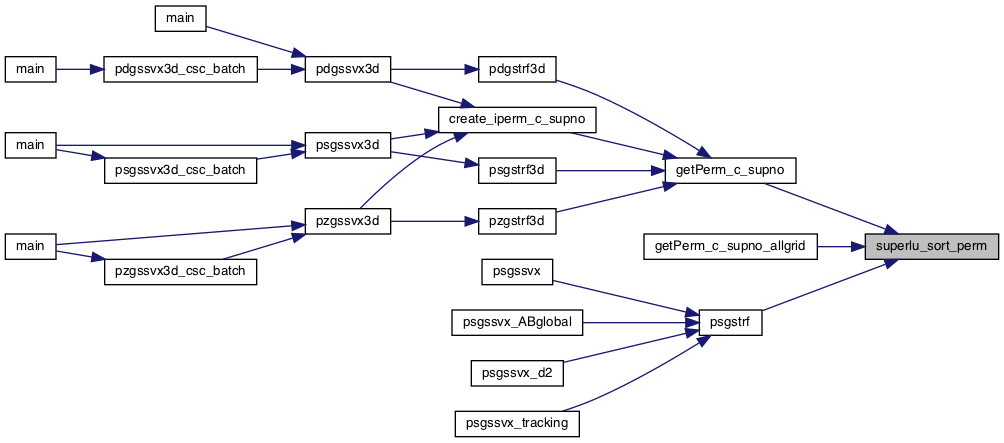

Here is the caller graph for this function:

◆ superlu_sort_perm()

| int superlu_sort_perm | ( | const void * | arg1, |

| const void * | arg2 | ||

| ) |

Here is the caller graph for this function: